Making Competency-based Assessment Work: Coherence & Rigor

To me, there are no two concepts more important to the structure of a competency-based assessment system than coherence and rigor. And yet, most curricula and school reform models only pay them lip service. The first section of this post frames ways that secondary schools have mislabeled rigor. Unfortunately, these missteps can wreak havoc on the arc of a single discipline, like science, over several years. The second section explores what EduChange did to resurrect rigor via coherent systems design while building the competency-based assessment structures for our Integrated Science Program.

Perversions of Rigor

When I began to learn about the program of studies in secondary science in a small city in Massachusetts, a program I was hired to ‘reform,’ I saw various perversions of academic rigor that I would uncover again and again for the next two decades.

One perversion is the well-documented process of segregating students by tracking them into Honors, College Prep, Alternative and other designations.

The sorting process often begins in Grades 7-8, particularly in math classes, and math placement then seems to dictate science course tracks. This always amuses me, since we spend endless hours at EduChange incorporating applied math into our curriculum because students need more practice.

In truth, most of the so-called Honors math students at any school I’ve worked with seem unable to walk down the hall to science class and remember how to do unit conversions or basic dimensional analysis.

Tracking is usually based on:

—grades, which are inherently inaccurate and inconsistent across teachers;

—teacher recommendations, which are inherently biased;

—and sometimes standardized high school entrance exams, which carry very large psychometric margins of error and may even stunt intellectual development (research forthcoming!).

Tracking is a well-known perversion of rigor and negates the growth model supported by competency-based assessment. Schools cannot track students and employ competency-based assessment without creating conflicting messages, pathways and incentives within their assessment system.

The next perversion of rigor plagues entire secondary programs and involves early transitions to upper/high school.

Academic difficulty climbs steeply in Grade 9, and sometimes in Grade 8, as courses try to cover too much ground across too many skill sets and concepts in too short a timeframe.

The wildly inaccurate message sent to students is that rigor is a function of volume: the total number of pages of reading assigned, the length of the required research paper, the number of problems to solve.

Harder teachers assign more work.

Schools that house Honors and College Prep students in the same classroom justify the Honors grade weighting by assigning more work at the higher level.

Students willing to lose sleep, fight through the anxiety, and demonstrate compliance on every level are rewarded. They learn to tolerate the volume and they carry the load throughout high school.

Unfortunately, this message about rigor is a lie.

To whoever needs to hear this: doing more work per unit time may increase one’s efficiency, but it has nothing to do with rigor.

Rigor is more aptly characterized by the negotiation of complexity; the analysis and evaluation of diverse viewpoints using evidence; problem-solving with empathy in the face of uncertainty; or designing for optimization.

In addition to lying to students about how well it prepares them for post-secondary endeavors, the volume-as-rigor approach fails to build lifelong learning dispositions by perverting the very process of learning itself.

Instead, the approach becomes the next sorting mechanism, because students who survive this transition period have proven they “can handle” AP or IB/DP courses later in high school. I find there is truth to this, but not because these advanced courses are more authentically rigorous.

First-year undergraduate courses, particularly in STEM, share the goal of weeding out “unfit” students, and the process is more ruthless at the university level. As you might imagine, many of the students deemed “unfit” for STEM majors are females and persons of color.

You can read a summary of how I worked with my Massachusetts colleagues to address rigor-as-volume and other programmatic issues back in the late nineties. I wish I could say that those issues no longer exist, but our methods back then could easily apply to many schools today.

A third programmatic perversion of rigor occurs later in high school.

Authentic rigor plateaus after Grade 10, and students who successfully negotiated the rites of passage in Grades 8-10 are insufficiently challenged in Grades 11-12.

This phenomenon exists even in AP and advanced classes, where the volume of work, rather than developmentally appropriate intellectual challenge, continues to drive curricula.

Students were sorted for what purpose, then? To do more of the same, just in different courses?

There is nothing more indicative of the factory model of schooling than this slog of low-grade task completion for its own sake. And the perpetuation of homogeneity cannot possibly serve diverse populations.

I truly believe this plateau is partly responsible for the erasure of critical-thinking gains by the end of high school that researchers have documented.

Unfortunately, this upper-secondary plateau has persisted for so long, and is so veiled by the prestige of the exam courses society craves, that merely raising it becomes a lightning rod in conversation.

In my experience, it is impossible to question the rigor of IB/DP, AP or favorite teacher-designed electives in Grades 11-12 without incurring the wrath of many adults. Teachers, parents and administrators believe these courses represent the highest level of achievement at their school, and point only to the standardized exam as evidence of rigor. The College Board, university admissions departments, and tiger moms have marketed AP courses with unmatched efficacy: 359,120 students took AP exams in 1990, compared to 2,825,710 students in 2019, an increase of roughly 687%.
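As a quick check on that growth figure, the percent increase is the change in the number of AP exam takers divided by the 1990 baseline:

```latex
\[
\frac{2{,}825{,}710 - 359{,}120}{359{,}120} \times 100\%
  = \frac{2{,}466{,}590}{359{,}120} \times 100\%
  \approx 686.8\% \approx 687\%
\]
```

In other words, roughly 7.9 times as many students sat AP exams in 2019 as in 1990.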

Oddly, adults cite the exam to justify a course’s rigor both in schools where student scores are consistently high and in schools where they are consistently low.

And the lack of an AP designation in a given subject at a given school effectively devalues the subject matter for older students. In our crumbling democracy, it is no coincidence that Civics carries no Honors or AP weight. Many students destined for Ivy League schools “placed out” of banal courses like Civics and Economics.

Despite persistent protests by champions of the AP Program, my own reviews of student work, teacher-designed tasks and chosen texts, along with observations of the day-to-day classroom experience in over 1,100 classrooms across Grades 8-12, point to a rigor plateau in the final two years of high school.

Beyond these longitudinal data, my article shares further data on the STEM readiness of U.S. high school students. This tragic missed opportunity is a prime motivator for EduChange’s assessment design work.


Resurrecting Rigor Through Coherence

Coherence is not standardization, nor is it a free-for-all.

Coherent systems contain distinct parts that remain stable: in our case, the overall sequence of modules and our master rubrics.

Coherent systems also include flexible parts, which we have built into day-to-day classroom decision making, inclusive technology integration, disciplinary literacy, task rubrics and the diverse ways that students can achieve success via our assessment system.

Over the years, some teachers have disregarded the stable components, and, predictably, the system has fallen apart for them.

Teachers are most familiar with the Pinterest approach to curriculum development because they are not provided with high-quality resources. They must fend for themselves with (guess what?) too little time to design for coherence! How can anyone expect teachers to find curricular stability when planning must be done in fits and starts during evenings and weekends?

Feeling an intentionally designed system evolve in your own classroom, and making modifications that jibe with it rather than fight it, is not intuitive for teachers. But within our curricular immersion, professional development support from an instructional coach provides authentic on-the-job training rather than pull-out PD workshops. And colleagues form an authentic professional learning community where diverse pathways are honored, just as they are with students.

We designed our way out of this inequitable, ineffective mess by building eight coherent systems that interface to complement each other so that a diverse range of students and teachers can grow authentically through rigorous, anxiety-free classroom experiences.

And we designed all of it to help trapped schools break free from the ties that bind.

I’ve written a bit about the content system in two earlier posts, and about different tenets of our assessment system in the four posts prior to this one. Now I’ll describe our designs for multi-year coherence, and how they support Vygotsky-esque incremental increases in rigor.

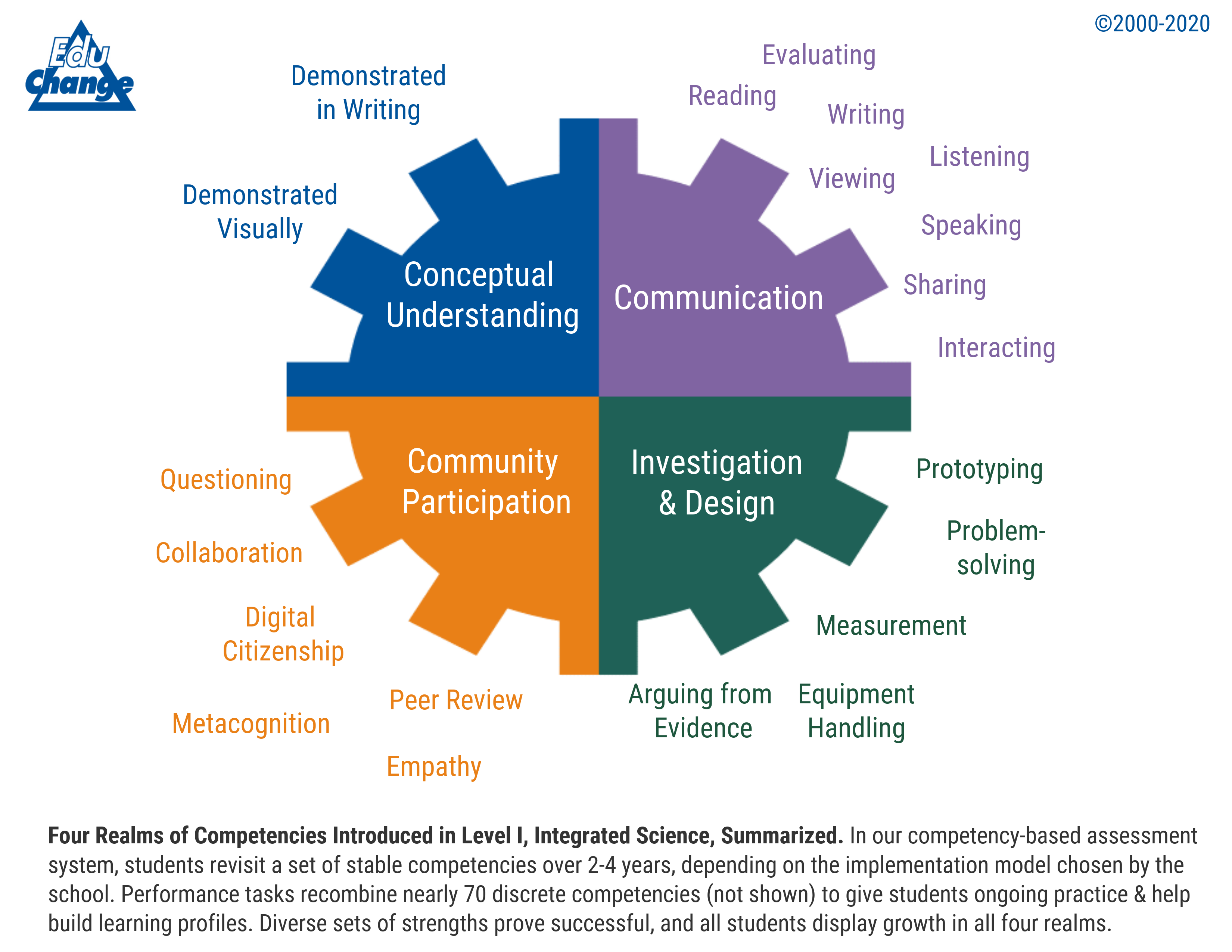

EduChange employs five master rubrics: four are used in Level I, and a fifth is added in Level II. Every performance task is built using these rubrics and only these rubrics.

No specific content is used in master or task rubrics. This summary image gives you a sense of how the four Level I realms apply to any content. That content neutrality is one reason why roughly 15% of our task and content designs may be updated annually.

There are just over 70 discrete competencies that may be recombined to build task rubrics. That number is deliberate: it is small enough that students who are assessed in a balanced fashion can build a learning profile for each discrete competency over four years, whereas many more would not fit into the science class time available in that span.
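To make the rubric architecture concrete, here is a minimal sketch of how task rubrics might be assembled only from master-rubric competencies, and how a per-competency learning profile could accumulate across years. Everything named here is hypothetical: the realm names (`realm_A` through `realm_E`), the competency IDs, the score scale, and the helpers `build_task_rubric` and `LearningProfile` are illustrative placeholders, not EduChange's actual rubrics or software. The sketch uses 10 competencies rather than the 70-plus in the real system.

```python
from collections import defaultdict

# Hypothetical placeholder rubrics: realm names and competency IDs are
# illustrative only. Four realms are available in Level I; a fifth is
# added in Level II.
MASTER_RUBRICS = {
    "realm_A": {"level": 1, "competencies": {"A1", "A2", "A3"}},
    "realm_B": {"level": 1, "competencies": {"B1", "B2"}},
    "realm_C": {"level": 1, "competencies": {"C1", "C2"}},
    "realm_D": {"level": 1, "competencies": {"D1"}},
    "realm_E": {"level": 2, "competencies": {"E1", "E2"}},  # added in Level II
}


def build_task_rubric(level, competency_ids):
    """Assemble a task rubric from master-rubric competencies only.

    Returns a dict mapping each competency ID to its master rubric (realm).
    Raises ValueError if a competency is not available at this level.
    """
    available = {
        cid: realm
        for realm, spec in MASTER_RUBRICS.items()
        if spec["level"] <= level
        for cid in spec["competencies"]
    }
    rubric = {}
    for cid in competency_ids:
        if cid not in available:
            raise ValueError(f"{cid} is not available at Level {level}")
        rubric[cid] = available[cid]
    return rubric


class LearningProfile:
    """One student's scores for each discrete competency, accumulated over years."""

    def __init__(self):
        self.history = defaultdict(list)  # competency ID -> [(year, score), ...]

    def record(self, task_rubric, year, scores):
        """Store scores, accepting only competencies the task actually assessed."""
        for cid, score in scores.items():
            if cid not in task_rubric:
                raise ValueError(f"{cid} was not assessed by this task")
            self.history[cid].append((year, score))

    def coverage(self):
        """Fraction of all master-rubric competencies scored at least once."""
        total = sum(len(spec["competencies"]) for spec in MASTER_RUBRICS.values())
        return len(self.history) / total


# Usage: a Level I task that recombines three competencies from three realms.
task = build_task_rubric(level=1, competency_ids=["A1", "B2", "C1"])
profile = LearningProfile()
profile.record(task, year=1, scores={"A1": 3, "B2": 2, "C1": 4})
print(profile.coverage())  # 0.3
```

The `coverage` check mirrors the balance concern in the paragraph above: with a fixed, finite set of competencies, one can see how close a student's profile is to touching every competency at least once over the program.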

Multi-year coherence is rare in secondary programs, mainly due to one-year-one-subject conventions. We have realized enormous learning benefits by opening up disciplinary architecture over 2-4 years.

Revisiting concepts, skills and dispositions in different contexts helps students appreciate their strengths, gain practice where they are less confident, and prepare to articulate specific, desirable competencies to future professors, employers or commanding officers. Here are the messages our assessment designs send to students.

It is critical to understand that all components of our program are designed in concert. We do not design in a linear, one-and-done fashion.

This is a very different design process from most one-grant-one-module endeavors. Even when the same authors go on to write more modules, the resulting sets almost never build sequentially or systematically into something greater than the sum of its parts. We believe coherent, growth-based learning systems should do just that.

Growth is a journey, and students experience the program in a sequence as they progress through school. Along the way, we ensure that students experience an ever-changing zone of proximal development. This prevents long-term plateaus and intellectual stagnation.

We architect rigor in our performance tasks by gradually increasing:

—the complexity of the texts, problems, laboratory materials, or scenarios we use;

—the number of competencies students must demonstrate;

—the number of realms (across the master rubrics) that students must navigate;

—the time it takes to complete the task (accommodations notwithstanding);

—the prior knowledge and skills that students are expected to marshal; and

—the degree of student autonomy and subsequent responsibility for completion.
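One way to picture this gradual increase is as six numbers per task that should never fall and should keep rising from one task to the next. The sketch below is a hypothetical illustration, not EduChange's design tool: the `TaskRigor` fields, their 1-5 scales, and the `check_progression` helper are assumptions. It also assumes a simplified rule that any drop in a dimension counts as a regression, whereas in practice a designer might accept a shorter task if it is markedly more complex.

```python
from typing import NamedTuple


class TaskRigor(NamedTuple):
    """Hypothetical numeric encoding of the six rigor dimensions of one task."""
    complexity: int        # texts, problems, lab materials or scenarios (e.g., 1-5)
    competencies: int      # number of competencies students must demonstrate
    realms: int            # number of master-rubric realms to navigate
    duration_days: int     # time to complete, before accommodations
    prior_knowledge: int   # prior knowledge and skills to marshal (e.g., 1-5)
    autonomy: int          # degree of student autonomy and responsibility (e.g., 1-5)


def check_progression(tasks):
    """List problems in a sequence of tasks, in the order students meet them.

    Task indices are 0-based. A dimension that drops between consecutive
    tasks is flagged as a regression; a consecutive pair where no dimension
    rises is flagged as a plateau.
    """
    problems = []
    for i in range(1, len(tasks)):
        prev, cur = tasks[i - 1], tasks[i]
        for name in TaskRigor._fields:
            if getattr(cur, name) < getattr(prev, name):
                problems.append(f"task {i}: {name} decreased")
        if not any(c > p for c, p in zip(cur, prev)):
            problems.append(f"task {i}: no dimension increased")
    return problems


sequence = [
    TaskRigor(1, 3, 1, 2, 1, 1),
    TaskRigor(2, 5, 2, 3, 2, 2),
    TaskRigor(2, 5, 2, 3, 2, 2),  # repeats the previous task's demands
]
print(check_progression(sequence))  # ['task 2: no dimension increased']
```

Flagging plateaus explicitly ties the sketch back to the upper-secondary plateau described earlier: a sequence that merely repeats the same demands is caught even though nothing got easier.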

Students feel task rigor ratchet up against the backdrop of stable, coherent rubrics, which in turn lets them trust their growth. If that is not the point of competency-based assessment, I’m not sure what is.

Ultimately, students take charge of their own developmentally appropriate STEM project, conduct it while engaging in a series of peer review opportunities, and produce a complete scientific paper.

We have partnered with JEI, an organization that hosts a free online journal for middle and high school students, complete with a team of Ph.D. candidates in different specialties who peer review manuscripts and offer feedback prior to publication. Unlike a one-time capstone, this partnership lets students steer their projects toward publication whenever they feel ready, and more than once. This supports diverse learning journeys by design.

Nature offers millions of examples of the power of systemic diversity. In the next post, I’ll explore how the practice of biomimicry supports equitable assessment designs for diverse learners…

Suggested Citation: Saldutti, C. (2020, January 4). Making competency-based assessment work: coherence and rigor. 1-2-3 Learn: EduChange Design Blog. https://educhange.com/coherenceandrigor/